Artificial Intelligence (AI) might sound surreal to some people, but it is already all around us. Netflix’s recommendation system, Siri, facial recognition, and Google Translate are all examples of AI. Briefly, AI is the simulation of human intelligence processes by computer systems. AI algorithms learn from data how to carry out a specific task.

The use of AI raises many ethical questions, from automation to privacy. But at least AI is objective, or is it?

It turns out that some AI systems have shown sexist behaviour. For example, Amazon had an AI recruiting tool that showed bias against women. The model was trained on resumes submitted to the company over a 10-year period. Most of those resumes came from men, reflecting how male-dominated the tech industry is. As a result, Amazon’s system taught itself that male candidates were preferable and started penalizing resumes that included the word “women’s”.

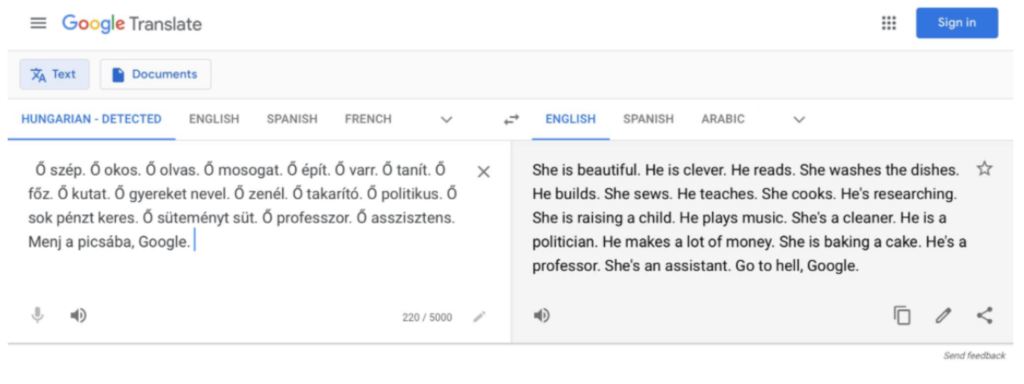

Furthermore, Google Translate has been accused of gender stereotyping. The Hungarian language, for instance, is gender-neutral and has no gendered pronouns. However, Google Translate chooses genders itself when translating from Hungarian to English. The image below clearly shows gender stereotypes.

AI learns from data. In the case of translation, AI is exposed to huge amounts of textual data from which it learns to recognise patterns. Ever-growing datasets allow access to hundreds of billions of words from around the web. It follows that AI’s bias comes from bias in the data, which in turn comes from people’s bias. Google Translate predicting the pronoun ‘she’ before the phrase ‘washes the dishes’ only reflects a stereotype that exists in our society.
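As a rough illustration of how a stereotype in text can become a stereotype in a model, here is a minimal Python sketch. It is not how Google Translate works; it assumes a toy "model" that simply picks whichever pronoun it has seen most often before a phrase. The corpus and the function names `pronoun_counts` and `predict_pronoun` are made up for this example.

```python
from collections import Counter

# Hypothetical toy corpus; real systems learn from billions of words.
corpus = [
    "she washes the dishes",
    "she washes the dishes",
    "he washes the dishes",
    "he is a professor",
    "he is a professor",
    "she is a professor",
]

def pronoun_counts(sentences, phrase):
    """Count which pronoun ("he" or "she") precedes `phrase` in the corpus."""
    counts = Counter()
    for sentence in sentences:
        pronoun, _, rest = sentence.partition(" ")
        if pronoun in ("he", "she") and rest == phrase:
            counts[pronoun] += 1
    return counts

def predict_pronoun(sentences, phrase):
    """Pick the pronoun seen most often before `phrase`, or None if unseen."""
    counts = pronoun_counts(sentences, phrase)
    return counts.most_common(1)[0][0] if counts else None

dishes_guess = predict_pronoun(corpus, "washes the dishes")
professor_guess = predict_pronoun(corpus, "is a professor")
```

Nothing in the code mentions gender roles. The skew comes entirely from the sentences it was given, so the guesses mirror whatever imbalance the text contains.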

Of course, sexism encoded in AI algorithms, which are so widely used today, is unacceptable. What can be done about it? Since AI learns from data, it seems most reasonable to adjust datasets so that they are balanced and unbiased. For example, equal numbers of men’s and women’s resumes in the dataset for Amazon’s hiring tool might help solve the problem.
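One simple way to balance a dataset is to downsample the larger group until every group is the same size. The sketch below shows the idea in Python. It assumes each record is a dictionary with a `gender` field, and the helper `balance_by_group` and the sample data are hypothetical, not Amazon’s actual pipeline.

```python
import random

def balance_by_group(records, key, seed=0):
    """Downsample so every value of `key` appears equally often."""
    groups = {}
    for record in records:
        groups.setdefault(record[key], []).append(record)
    if not groups:
        return []
    # Every group is cut down to the size of the smallest one.
    smallest = min(len(items) for items in groups.values())
    rng = random.Random(seed)  # fixed seed keeps the sample reproducible
    balanced = []
    for items in groups.values():
        balanced.extend(rng.sample(items, smallest))
    return balanced

# Hypothetical data: 8 resumes from men, 2 from women.
resumes = [{"id": i, "gender": "m"} for i in range(8)]
resumes += [{"id": i, "gender": "f"} for i in range(8, 10)]

balanced = balance_by_group(resumes, "gender")
```

Equal counts alone may not be enough, since the text of the resumes can still carry stereotypes, but it removes the most obvious imbalance.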

Increased gender equality in the tech field can also help tackle the issue. A well-known concern in the field of AI is that most virtual assistants, such as Alexa and Siri, are modelled on women. They have female voices and female names. They also often respond to abuse and harassment from users in a kind and playful manner.

At the same time, only 12% of AI researchers are women. Perhaps having more women working in the field would help raise developers’ awareness of the issue and make AI more impartial.

Overall, it is both interesting and disappointing to see how artificial intelligence reflects the gender stereotypes that exist in people’s minds. It proves yet again that the problem of gender discrimination still exists and needs to be addressed.